Book

… the algorithm for the translation of sensor data into music control data is a major artistic area; the definition of these relationships is part of the composition of a piece. Here is where one defines the expression field for the performer, which is of great influence on how the piece will be perceived. (Waisvisz, 1999)

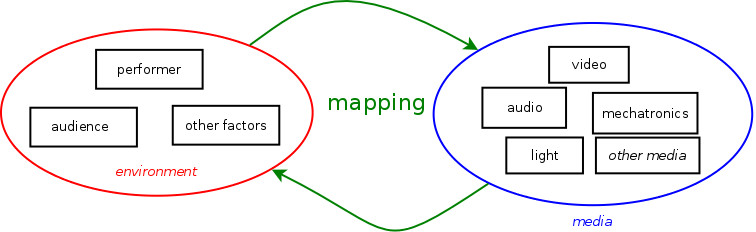

Artists have been making electronic musical instruments and interactive installations using sensors and computers for several decades now, yet there is still no book available that details the process, approaches and methods of mapping gestures captured by sensors to output media such as sound, light and visuals.

Tools for this kind of mapping keep evolving, and a lot of knowledge is embedded in them. In most cases, however, this knowledge is not documented outside of the implementation itself. This raises the question of how we can preserve knowledge of how a performance or instrument works when tools become obsolete, file formats are inaccessible or undocumented, and source code is unavailable. How can we learn from what other artists have made before us? How can artists communicate about their approaches to mapping if they are using different tools to do so?

Just a Question of Mapping

Ins and outs of composing with realtime data

The book aims to give an overview of the mapping process and of the common techniques used in it. These methods will be described in a way that gives artists guidelines on when to use a method and how to implement it in the environment they work in. Example implementations of these methods will be provided separately from the book, in a repository to which readers can contribute.

The book will consist of the following parts:

- Introduction - framing the book in its historical and aesthetic context.

- The Creation Process - how to start, build and develop a project involving mapping.

- The physical elements and their connections - details on sensors, circuits, communication protocols and the physical interface.

- Understanding data - ways of understanding and looking at data, and characteristics of data.

- Processing data - everything from range mapping, filtering, buttons and modes to mixing and splitting signals and the output model.

- Tuning data - calibration, dealing with noise, tuning parameters.

- Structure - time, memory, programming structure and compositional structure.

- Case studies - descriptions of concrete works (instruments, performances and/or installations), based on interviews and an in-depth study of their implementation, with references to the methods described elsewhere in the book. The case studies are interleaved between the parts.

Outline

Case Studies (interleaved between the parts)

- STEIM’s software Spider (Sensorlab), JunXion, LiSa and RoSa, and The Hands.

- Andi Otto’s Fello (augmented instrument)

- Jeff Carey’s ctrlKey, consisting of a joystick, a keypad, pads and faders (digital instrument)

- Cathy van Eck - composing for objects

- Roosna & Flak - dialogue between sound and movement

- Jaime del Val’s Metatopia - environments for metaformances and disalignment

1. Introduction

- Interactive art and the question of mapping

- The promise of interactive art

- From gestures to output media

- Describing and understanding interactive works

- Overview of this book

- Case studies

- Visual language

- Aesthetics in interactive, digital art

- Visual and media art

- Sound and music

- Dance

- Conclusion

2. The creation process

- The project

- Points of departure

- Defining the project

- Music

- Installation

- Dance

- Development process

- Concept to plan

- Prototype and test

- Consolidate

- Documentation

3. The physical elements and their connections

- Introduction to physicality

- Analysing the physicality

- Elements and connections

- Custom sensor interfaces

- Sensors

- Electronic circuits

- Microcontrollers

- Prototyping and building electronic circuits

- Commercial, off-the-shelf controllers

- Music controllers

- Game controllers

- Mobile phone apps

- Hacking off-the-shelf controllers

- Microphones and audio analysis

- Amplitude

- Pitch

- Spectral features

- Beat tracking

- Spatial methods

- Cameras and computer vision

- Analysis of the camera image

- Challenges in the physical world

- Three-dimensional tracking

- Motion capture

- Machine learning algorithms for computer vision

- Elements for processing

- Computers

- Software & computation

- Preparing the computer for your project

- Output elements

- Sound

- Light

- Video and visuals

- Microcontrollers for output media

- Connections

- The cable

- The connection

- Creating custom connections

- Standard cables and connectors

- Communication protocols

- Binary numbers: bits and bytes

- Serial protocols

- Digital protocols used by sensors and actuators

- Communication over the serial port, or serial via USB

- HID

- MIDI

- OSC

- DMX

- Wireless communication

- Choosing communication protocols to use

- Bringing the elements together

- Making choices for elements

- Semantics of the interface

- Effort and ease of use

- Within and out of reach

4. Understanding data

- Types and roles of data

- What is data?

- The role of data

- Transmutation: from one type to another

- Continuous data streams within events

- Events within events

- Characteristics of data streams

- Timescale

- Range

- Resolution of the amplitude

- Linearity

- Repeatability and reproducibility

- Dimensionality

- Sampling and quantisation

- Quantisation

- Sampling of data

- Exploring data

- Seeing the data

- Analysing data

- Improving the data quality and optimisation

- Informing aesthetic choices

5. Processing data

- From one range to another

- Moving between ranges

- From your input value to a standardized range

- Unipolar and bipolar signals

- Inverting the range

- Out of range

- Splitting the range

- From a standardized range to a parameter range

- Steps and quantisation

- Segmenting the range

- Complex parameter spaces with multiple segmented ranges

- Nonlinear approaches

- Stages of range mapping

- Filtering and deriving data

- Calculation methods for filters

- Ranges and thresholds

- Rectification: the absolute value

- Smoothing

- Changes

- Accumulation

- General filter equations

- Amplitude and bandwidth

- Peaks and onsets

- Power or energy

- Periodicity and spectral features

- Choosing filters for your gestures

- Buttons

- Buttons, keys and switches

- On and off, down and up

- Trigger

- Toggle

- Counting button presses

- Combinations

- Order of keypresses

- Timing

- Glitches: debouncing

- The panic button

- States and modes of behaviour

- States, modes, presets?

- States and conditions

- Modal control

- Mixing and splitting signals

- Mixing inputs to control one output parameter

- Splitting signals: generating multiple output parameters from one input signal

- Multiple inputs and multiple outputs: matrix approach

- Physical entanglement of input parameters

- Preset interpolation

- Implicit methods

- Output models

- Output modules and processes

- Interconnections between output media

6. Tuning data

- Tuning and calibration

- Where to calibrate or tune

- Methods

- Dealing with disturbances

- Noise

- Spurious data

- Loss of signal

- Invalid numbers

7. Structure of the interactive system

- Time and memory

- Time

- Memory

- Data as material

- Patterns in control data

- Recalling presets and recorded data

- Program structure and concepts

- Modules within a program

- Processing

- Data structures

- Compositional structure