News

-

Proceedings ICLI online

The proceedings of the International Conference on Live Interfaces are now online!

My colloquial paper “Mapping the question of mapping” can be found there along with a recording of the presentation.

-

Call open for work session at Splendor in August

The call is open for the last work session planned within the book-writing trajectory!

The work session will take place at Splendor on August 19, 20 and 21, 2020.

The deadline for submitting an application is August 7th.

> See here for all the information on the worksession.

In collaboration with Splendor, we will provide a safe space that follows the regulations for preventing the spread of COVID-19.

-

Reflection on the online worksession

The worksession ‘Mapping my Mapping’ at V2_ was moved online due to the COVID-19 crisis. Moving it online meant that I could also include some participants who had originally planned to take part in the work session at NOTAM, Oslo.

The artists that took part were:

We analysed works chosen by these artists themselves and shared our insights.

There were two additional artists who listened in and contributed questions to the discussion:

Working online

It was a bit of a challenge to change the work session to an online format. I restructured the material so that the work session took place over four days rather than three, with some time in between the days. We then alternated between:

- watching prepared videos

- online discussion sessions together, to ask questions and present the work done

- individual working sessions, with the option to get back to me, as the host of the work session, to discuss one-on-one.

The main aim was to keep it from becoming too heavy (being online for whole days) by using prepared video materials that everyone could watch at their leisure, while still having the interaction that would otherwise happen during a physical workshop. For the discussion parts, I think that worked well. As the work session host, I kind of missed being able to peek over people’s shoulders to see what they were doing and help them along. The one-on-one sessions helped a little with this.

It was hard to get a full understanding of the works we discussed without seeing and experiencing them in action.

As tools, we initially used BigBlueButton, an open-source platform, but on the last two days we switched to Zoom and Jitsi, as the server we were using had some issues. Using BigBlueButton did set the tone for our worksessions, though. Rather than continuously using video, we made use of the tools provided by that platform (sharing PDFs of presentations rather than sharing screens) and also of its limitations (focusing on audio, supported by chat). This created a space with a lot of room to listen to each other.

Mads, one of the participants, reflects:

It was actually both a quite relaxing and focused way of working in my opinion. It wasn’t necessary to spend as much social energy as in an IRL work session, and the videochat etiquette of muting the microphone and/or video when not speaking really means you can listen a bit more deeply while letting your guard down as well, so to speak.

Ari, another participant, reflects:

What worked well for me in the online format were the online and offline elements: to watch an introduction video, then meet online, have an offline work session for yourself and then re-meet online was a good construction. It made it well possible to focus on working and develop something. Also the amount of days and the distance between them worked well for me.

What I found a bit more difficult in the format was that it stayed more passive due to being muted and invisible in the online environment a lot. This makes it of course a bit harder to really meet each other and also a bit more difficult to follow the projects. I felt more hesitation to really ask in depth about the projects, as I felt there is not enough time and it might be irrelevant. But I guess I am also just missing the physical more subtle communication, so that is just an online problem. Of course in a physical situation you can grasp much more of a project as well, already by just seeing and touching it.

The projects

The projects were diverse, ranging from music performance instruments to systems for creating interactive installations. During the work session, the perspective on what these projects were also changed.

Tejaswinee Kelkar - Mapping with the voice

Tejaswinee’s performance practice is about using the voice as a driving force behind different resonators. She plays with distortion of the voice in a very physical sense, using vibrotactile loudspeakers attached to objects that then resonate with the sound of the voice. Feedback is introduced into this setup by picking up the sound from these objects again with piezo microphones. In addition, she uses Myo armband sensors to manipulate the sound processing.

Rafaele Andrade - Knurl

Knurl, a ‘cello’ with 16 strings, marks a shift towards exploring the potential of hybrid (acoustic-electronic) instruments to be enhanced through built-in electronic components, as well as the potential for music to be a shared endeavour between performers, global audience members and their networks. Knurl is a solar-powered, reprogrammable hybrid cello that performers and audiences can interact with and manipulate. Its four modes of performance (Synthesis, Detection, Programming and Analogue mode) are installed on a microcontroller within a self-contained electronic circuit, to which all the electronic devices are attached, including speakers, microphone, microcontroller, solar panels and sensors.

The instrument has quite a few elements that interact, and everything about it is custom-made. Capacitive touch sensors are used both as buttons and as analog sensing inputs; solar panels both power the system and serve as analog sensing inputs; and piezo pickup microphones mounted on the bridge sample the sound from the strings. The instrument emits its sound from four speakers built into the instrument itself.
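To illustrate how a single sensor input can serve both as a button and as a continuous control, here is a minimal Python sketch. It is not Knurl’s firmware: it assumes a reading already normalised to the range 0.0 to 1.0, and the `DualUseSensor` class and its threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DualUseSensor:
    """Hypothetical sketch: one normalised sensor reading (0.0-1.0) read both
    as a button (with hysteresis) and as a continuous control value."""
    press_threshold: float = 0.6    # assumed value: above this counts as a press
    release_threshold: float = 0.4  # assumed value: must drop below this to release
    pressed: bool = False

    def update(self, reading: float) -> tuple[bool, float]:
        # Clamp the raw reading so the continuous output stays in range.
        value = min(max(reading, 0.0), 1.0)
        # Hysteresis: separate press/release thresholds avoid rapid toggling
        # when the reading hovers around a single threshold.
        if not self.pressed and value > self.press_threshold:
            self.pressed = True
        elif self.pressed and value < self.release_threshold:
            self.pressed = False
        return self.pressed, value


if __name__ == "__main__":
    sensor = DualUseSensor()
    for reading in [0.1, 0.65, 0.5, 0.3]:
        print(sensor.update(reading))
```

Using two thresholds is one common design choice here: the button state does not flicker while the reading hovers near the boundary, and the continuous value is still passed through for analog mappings.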

The work session helped to make the mappings between the elements clearer, through a close analysis of each of the subsystems.

Ariane Trümper - What we want to hear

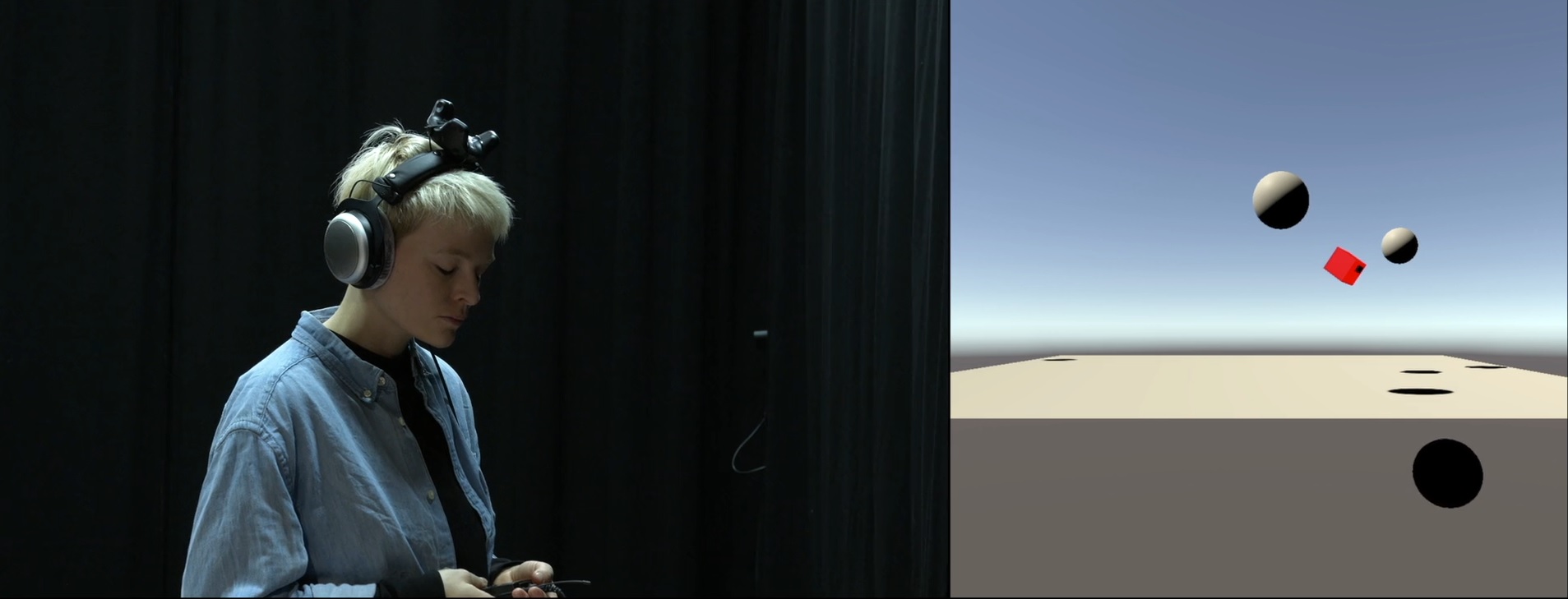

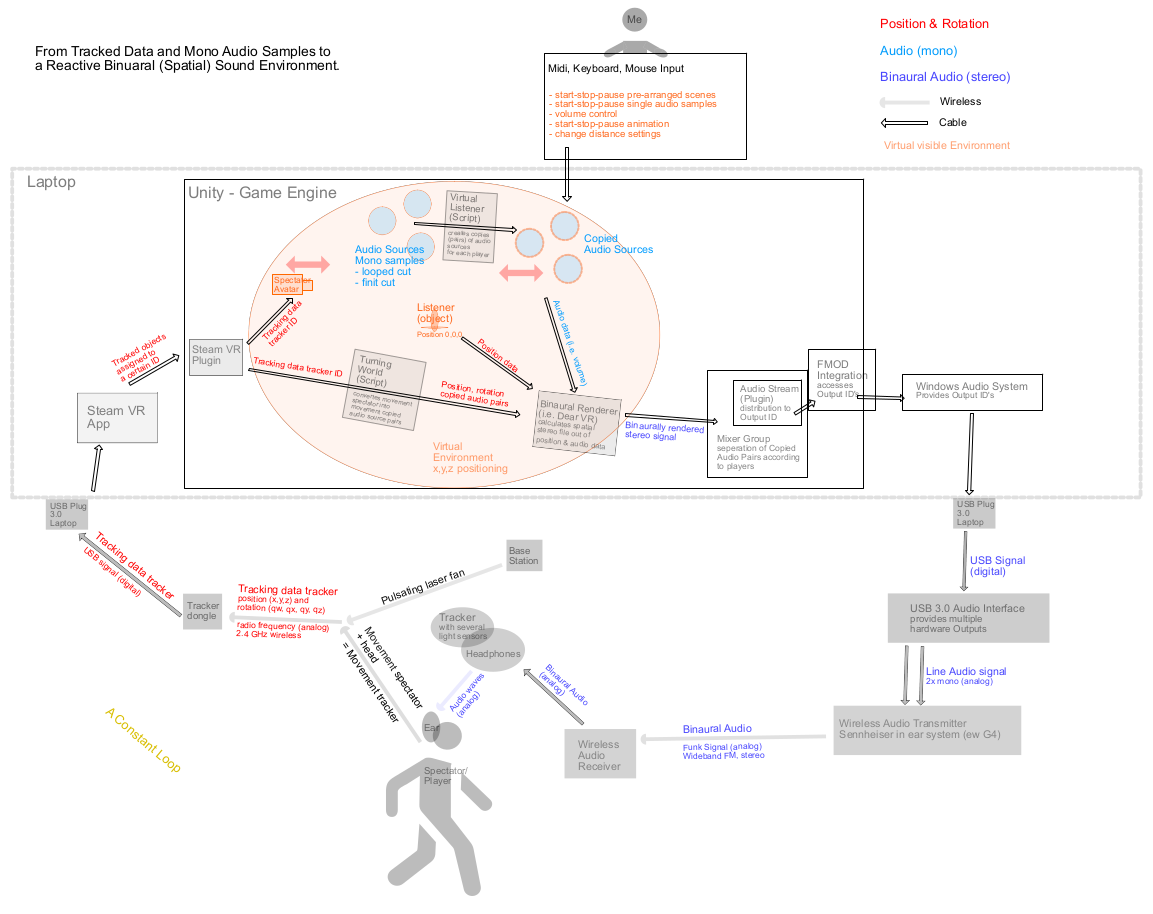

Ariane, who has a background as a scenographer and media artist, is developing a stage that is not seen but heard. She works on a setup that uses head-tracking and position-tracking, in which the binaural soundscape that listeners hear over headphones depends on their position and orientation in the space. With this setup, she aims to accommodate up to four participants (listeners) at a time, rather than offering a solo experience.

To realise this, she uses a virtual environment created in Unity, with a binaural rendering plugin from dearVR and the SteamVR plugin. The listeners are tracked with HTC Vive trackers and base stations.
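A core geometric step in such a setup is working out where a virtual sound source lies relative to each tracked listener’s head. In Ariane’s setup this is handled inside Unity by the dearVR and SteamVR plugins; the Python sketch below only illustrates the idea on the horizontal plane, assuming a Unity-like convention (yaw 0 faces +z, positive yaw turns towards +x). The function name `relative_azimuth` is hypothetical.

```python
import math


def relative_azimuth(listener_xz, listener_yaw_deg, source_xz):
    """Hypothetical sketch: azimuth (degrees) and distance of a virtual sound
    source relative to a tracked listener, on the horizontal plane.

    Assumed convention (Unity-like): yaw 0 faces +z, positive yaw turns
    towards +x; a result of +90 means the source is to the listener's right.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    # Bearing of the source in world space, measured from +z towards +x.
    bearing = math.degrees(math.atan2(dx, dz))
    # Subtract the head orientation and wrap into the range [-180, 180).
    azimuth = (bearing - listener_yaw_deg + 180.0) % 360.0 - 180.0
    distance = math.hypot(dx, dz)
    return azimuth, distance


if __name__ == "__main__":
    # Listener at the origin facing +z, source one metre to the right.
    print(relative_azimuth((0.0, 0.0), 0.0, (1.0, 0.0)))
```

With several listeners, the same calculation would run once per tracker, so each listener hears the same virtual stage from their own position and orientation.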

When she started the work session, she already had quite detailed drawings of how she connects the different elements, from her laptop to the headphones and trackers. During the work session, she got the chance to focus more deeply on the actual signal flows through this system. As she usually works with consumer electronics and redefines their functions for her installations, mapping in this way allowed her to dive further into how such hardware is built and to increase her ability to think of further alternatives and appropriations.

Mári Mákó - Schmitt

Schmitt is an instrument designed for a 20-minute quadraphonic solo live-electronic music performance. The piece is meant to challenge and explore the relationships between motion, gesture and music in a multichannel sound system. The instrumentation is centred on a self-built Schmitt oscillator (hence the title), whose sound transformation is the sonic journey of the piece. This transformation also reflects the narrative of the piece, which is about overcoming existential crisis and rebirth.

The signal processing is controlled with an accelerometer, emphasising and strengthening the gestural choreography with the Schmitt oscillator. The oscillator works with light sensors, completing the relationship between movement and shadow. In addition, the sound source’s signal is projected into a multichannel speaker setup, representing its liveliness and its journey throughout the piece, while it is simultaneously contrasted with drone textures.

The Schmitt oscillator has two light-dependent resistors, which determine its pitch and rate. The audio is then processed digitally to change its timbre, controlled by an accelerometer that also changes the spatial position of the sound.

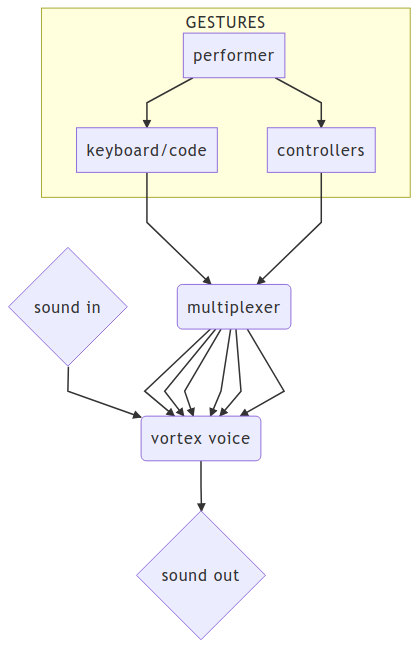
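As a hypothetical example of mapping accelerometer data to spatial position in a quadraphonic setup, the Python sketch below turns the direction of tilt into a pan angle and distributes the signal over four speakers with equal-power pairwise panning. The speaker layout, the function names and the tilt-to-angle mapping are all assumptions, not a description of Mári’s actual patch.

```python
import math

# Assumed quadraphonic layout: speaker angles in degrees, clockwise from front.
SPEAKER_ANGLES = [45.0, 135.0, 225.0, 315.0]


def tilt_to_angle(ax: float, ay: float) -> float:
    """Hypothetical mapping: direction of accelerometer tilt (x, y axes) to a
    pan angle in [0, 360), with 0 = front and 90 = right."""
    return math.degrees(math.atan2(ax, ay)) % 360.0


def quad_gains(angle_deg: float) -> list[float]:
    """Equal-power pairwise panning across four speakers."""
    angle = angle_deg % 360.0
    gains = [0.0] * 4
    for i, start in enumerate(SPEAKER_ANGLES):
        end = SPEAKER_ANGLES[(i + 1) % 4]
        span = (end - start) % 360.0     # 90 degrees between neighbours
        offset = (angle - start) % 360.0
        if offset < span:
            # Position between speaker i and its clockwise neighbour, 0..1.
            frac = offset / span
            gains[i] = math.cos(frac * math.pi / 2)
            gains[(i + 1) % 4] = math.sin(frac * math.pi / 2)
            break
    return gains


if __name__ == "__main__":
    # Tilting to the right pans between the front-right and rear-right speakers.
    print(quad_gains(tilt_to_angle(1.0, 0.0)))
```

Equal-power panning keeps the sum of the squared gains at 1, so the perceived loudness stays roughly constant as the sound moves around the space.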

Mads Kjeldgaard - Vortex

Vortex is an open-source computer music system for improvisation and composition. It is inspired by reel-to-reel tape recorders, cybernetic and feedback music, as well as more contemporary generative systems. At its core is a complex web of sound processes divided into voices, all interconnected in feedback paths and outputting their sound to digital looping varispeed tape reels. The name is meant to illustrate that there is a continuous, turbulent flow, and that the sounds and movements of the performer disappear into this fluid motion.

The system is not tied to a fixed set of physical controllers; instead, different physical controllers can be hooked up to it.

The work session helped to explain the complexity of the system and how the different processes are interconnected. This in turn led to some new approaches for mixing the different signals (LFOs and controls linked to physical controller inputs) that affect the same parameters of a voice.

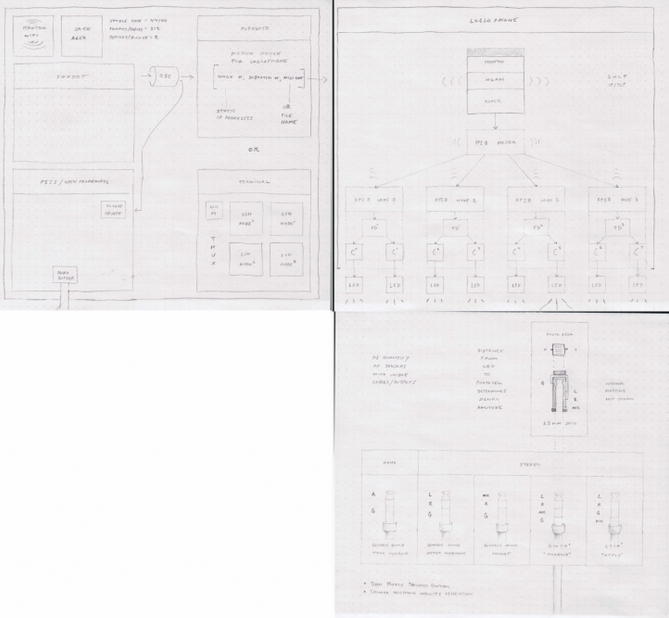
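One way to think about several signals (for example an LFO and a physical controller) acting on the same voice parameter is to compare mixing strategies. The Python sketch below is hypothetical and not taken from Vortex: it assumes bipolar modulation signals in [-1, 1], each paired with a depth in [0, 1], and a parameter normalised to [0, 1].

```python
import math


def lfo(t: float, rate_hz: float) -> float:
    """Bipolar sine LFO in [-1, 1]."""
    return math.sin(2 * math.pi * rate_hz * t)


def mix_parameter(base: float, modulators: list[tuple[float, float]],
                  mode: str = "add") -> float:
    """Hypothetical sketch: combine several bipolar modulation signals
    (each in [-1, 1], paired with a depth) into one parameter in [0, 1].

    "add": weighted offsets are summed around the base value, then clamped.
    "scale": each modulator moves the value a fraction of the remaining
    headroom, so the result stays in range without needing the clamp.
    """
    if mode == "add":
        value = base + sum(depth * signal for signal, depth in modulators)
    elif mode == "scale":
        value = base
        for signal, depth in modulators:
            # Positive signals move towards 1, negative towards 0,
            # proportional to the headroom left in that direction.
            headroom = (1.0 - value) if signal >= 0 else value
            value += depth * signal * headroom
    else:
        raise ValueError(f"unknown mode: {mode}")
    return min(max(value, 0.0), 1.0)


if __name__ == "__main__":
    knob = 0.4  # hypothetical bipolar controller value in [-1, 1]
    for t in (0.0, 0.5, 1.0):
        print(mix_parameter(0.5, [(lfo(t, 0.25), 0.3), (knob, 0.2)]))
```

In the additive mode, several sources can easily pile up and hit the clamp; the scaling mode trades some directness for always staying in range. Which behaviour feels better in performance is a musical choice as much as a technical one.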

Luke Demarest - the Loglophone

The Loglophone is a musical interface that plays back sound files through LEDs using pulse-width modulation, and remixes different sounds with a light-sensing diode that is connected directly to an audio input channel (or directly to headphones).

Throughout the work session, Luke realised that his approach to this instrument and its conceptualisation are much more strongly connected to the initial concept of an installation. This is perhaps related to his background as a visual artist rather than as a musician.

The instrument is self-contained: the software producing the waveforms sent to the 8 LEDs runs on four parallel Raspberry Pis mounted inside the instrument. The instrument can then be controlled by a live coder using FoxDot, and visuals are produced as well.
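The pulse-width modulation principle behind the Loglophone can be sketched as follows: each audio sample becomes the duty cycle of an on/off LED pattern, and averaging the light over each PWM period recovers an approximation of the sample. This Python sketch is hypothetical and not the Loglophone’s software; the per-period averaging in `pwm_decode` is only a simplified stand-in for the low-pass smoothing one would expect from the light sensor, audio input and listening chain.

```python
def pwm_encode(samples: list[float], ticks_per_sample: int = 16) -> list[int]:
    """Hypothetical sketch: encode audio samples in [-1, 1] as an on/off LED
    bitstream, one PWM period of `ticks_per_sample` ticks per sample."""
    bits = []
    for s in samples:
        duty = (min(max(s, -1.0), 1.0) + 1.0) / 2.0  # map [-1, 1] to duty [0, 1]
        on_ticks = round(duty * ticks_per_sample)
        bits.extend([1] * on_ticks + [0] * (ticks_per_sample - on_ticks))
    return bits


def pwm_decode(bits: list[int], ticks_per_sample: int = 16) -> list[float]:
    """Simplified stand-in for the smoothing in the receiving chain: average
    LED brightness over each PWM period, mapped back to [-1, 1]."""
    out = []
    for i in range(0, len(bits), ticks_per_sample):
        period = bits[i:i + ticks_per_sample]
        duty = sum(period) / ticks_per_sample
        out.append(duty * 2.0 - 1.0)
    return out


if __name__ == "__main__":
    print(pwm_decode(pwm_encode([0.0, 0.3, -0.7, 1.0])))
```

With 16 ticks per sample, the round-trip error is at most 1/16 in the [-1, 1] sample range; more ticks per sample give finer amplitude resolution at the cost of a higher switching rate.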

-

Work session online

The work session that was planned at V2_ has been moved online. Some of the participants who had signed up for the cancelled NOTAM work session are now also taking part in this session.

I’m looking forward to getting to know the different projects of the participants in detail!
